Before the Number

Why Every Critical System Eventually Gets Measured and Why AI Is Next

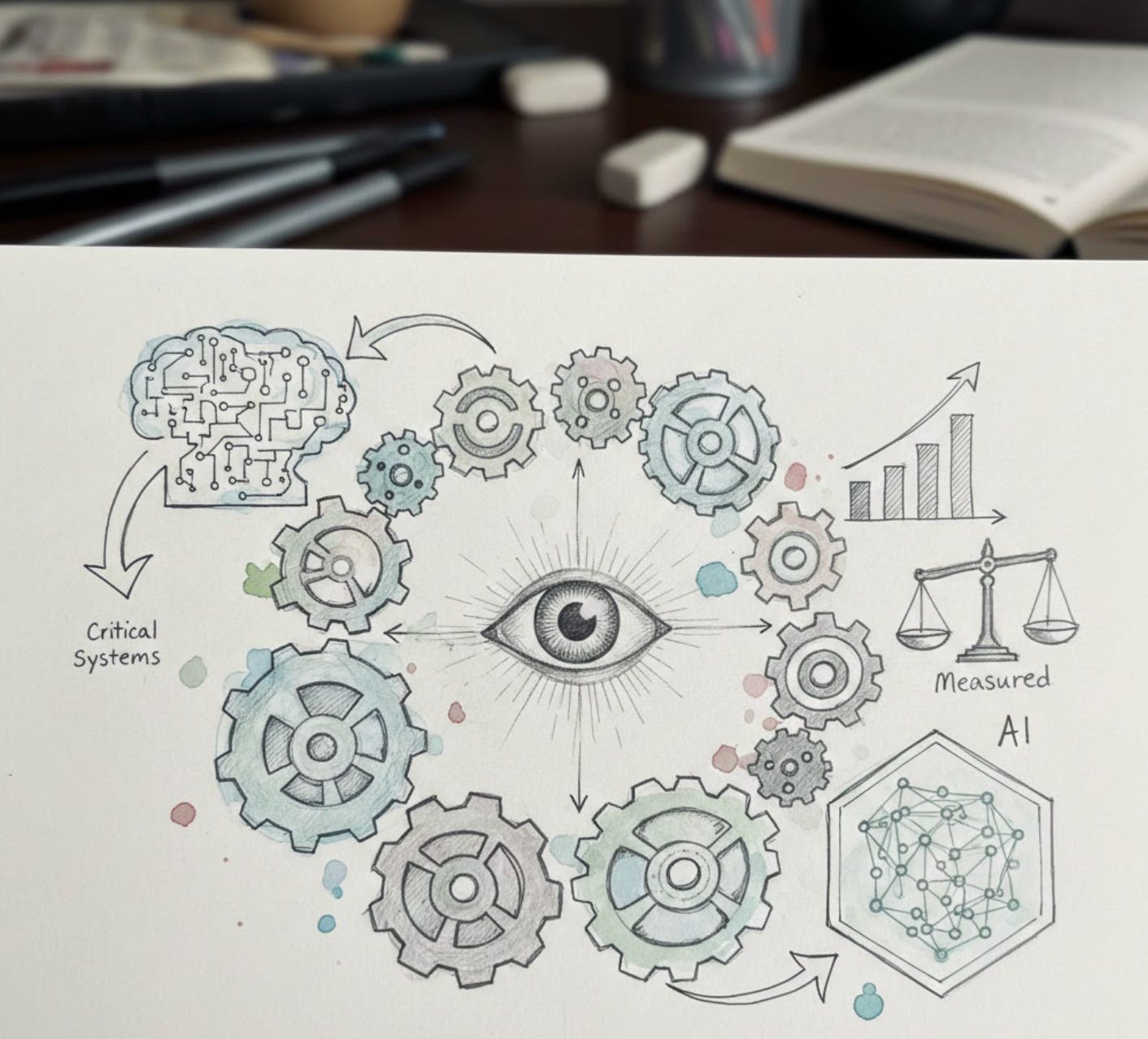

Two weeks ago, on January 22, I announced that AI governance now has a score.

Singapore released the world’s first agentic AI governance framework. South Korea’s AI Basic Act became enforceable. And on that same day, we shipped METRIS, a quantitative governance score for AI systems.

One of the responses that stayed with me came from a reader who wrote: “The distinction between frameworks and real, measurable governance is so crucial. Finally, someone speaks about dynamic AI risk.”

An AI/ML engineer called METRIS’s FICO-style approach “a total game changer for clarity” and then asked the right question: how does METRIS handle the shifting weight of different regulations as they evolve in real time?

A data engineer was more direct: “METRIS needs to be deployed everywhere, all at once. Think of the corporate goofs that could have been avoided.”

These reactions tell me the market knows it needs a number. But before I explain what METRIS does or how it works, I want to go deeper. I want to share why it needs to exist.

Because this isn’t a technology story. It’s a measurement story. And it starts with a question I’ve been asking my entire career.

The Question

I have fought ambiguity my entire professional life.

Twenty-five years across healthcare, pharma, finance, and technology. In every role, the hardest problems were never technical. They were the ones where two equally qualified people looked at the same situation and reached opposite conclusions. Where the answer depended on who you asked, what day it was, or which office you walked into.

That kind of ambiguity doesn’t just slow things down. It erodes trust. It makes fair outcomes impossible. And it creates a world where accountability is always someone else’s problem.

So I started asking a question that wouldn’t leave me alone: How did humanity solve ambiguity before?

The answer, every single time, was the same. We invented a number.

Banking Before FICO

Before 1989, getting a loan was a conversation. A loan officer looked at you across a desk. They reviewed your application. They considered your employment, your address, your references. And then they made a judgment call.

The same person, with the same income and the same history, could be approved at one branch and denied at another. The process was subjective, inconsistent, and (let’s be honest) discriminatory. Billions of dollars were allocated based on gut feeling, personal bias, and the quality of a handshake.

Then Fair, Isaac and Company introduced a three-digit number.

FICO didn’t add technology to lending. It removed ambiguity from lending. One number. Same calculation everywhere. A 720 in New York meant the same thing as a 720 in Nebraska. Suddenly, lending decisions were comparable, auditable, and fairer than the alternative.

Today, nothing moves without a credit score. Mortgages, car loans, insurance premiums, apartment rentals. The number became infrastructure.

Medicine Before Lab Tests

A doctor feels your forehead. “You seem warm.” Another doctor examines you an hour later. “You seem fine.” Same patient. Two opinions. Who’s right?

Before quantitative diagnostics, medical decisions were based on observation, intuition, and experience. All valuable, but subjective. Two physicians could examine the same patient and reach opposite conclusions. Treatment varied not by disease, but by doctor.

Then someone measured body temperature. Then blood count. Then glucose levels. Then cholesterol, then hemoglobin A1C, then troponin levels, then genetic markers.

Numbers didn’t replace doctors. Numbers gave doctors a shared reality to work from. A blood glucose of 280 mg/dL means the same thing in Asia, America, or Europe. The measurement created a common language, and with it, accountability.

Education Before Standardized Assessment

A teacher reads your essay and says “good.” Another teacher reads the same essay and says “mediocre.” A third says “brilliant.” The essay hasn’t changed. The judges have.

Before rubrics, scores, and standardized marking systems, educational assessment was entirely subjective. You can argue about whether standardized testing is perfect; it isn’t. But it made assessment comparable. A 92 means the same thing regardless of who graded it. Progress could be tracked. Gaps could be identified. Accountability became possible.

The Deeper Pattern

If you go far enough back, you find the origin of measurement itself: the moment societies decided that subjective trust wasn’t enough.

Signatures. Seals. Notarization. Weights and measures. Currency denominations. Accounting standards. Credit ratings. Safety certifications. Every one of these innovations was born from the same realization: when the stakes are high enough, “trust me” is not a system. Measurement is.

The pattern is always the same. First, a critical human activity operates on judgment alone. Then the stakes get high enough that inconsistency becomes intolerable. Then someone invents a way to measure. And the measurement becomes the new infrastructure, so foundational that within a generation, no one can imagine doing without it.

Now Look at AI

AI systems are making lending decisions. Diagnosing patients. Evaluating students. Screening job applicants. Flagging criminal suspects. Approving insurance claims. Moderating speech. Predicting recidivism.

These are the exact same domains that humanity spent centuries learning to measure. The lending system has a FICO score. The lab has quantitative diagnostics. The exam has a rubric. The contract has a signature.

But the AI that is replacing these systems? The AI that is now making these consequential decisions on our behalf?

Ask “How governed is this AI system?” and the answer you get is: a checklist. A policy document. A consultant’s opinion. A yes-or-no audit. Or worse, a shrug.

There is no number.

The Problem with Binary

The AI governance conversation today is stuck in binary. Compliant or not. Pass or fail. And that framing is the source of the paralysis.

Consider two companies.

Company A has done nothing: no documentation, no fairness testing, no monitoring, no risk assessment.

Company B has documented all its models, implemented bias testing, and established human oversight protocols, but hasn’t yet completed adversarial robustness testing.

In a binary system, both fail. Same result. Same bucket. Binary made them identical. But they are not identical. One is at 50. The other is at 680. One needs a transformation. The other needs a nudge.
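For readers who think in code, here is a minimal sketch of that contrast. It is an illustration only, not the METRIS scoring engine: the four control names are taken from the Company B description, while the equal weights, the 0-to-1000 scale, and the function names are assumptions invented for this example, so the output will not reproduce the 50 and 680 quoted above.

```python
# Hypothetical controls and weights, chosen only for illustration.
# They are NOT the METRIS model, which this article does not describe.
WEIGHTS = {
    "documentation": 250,
    "bias_testing": 250,
    "human_oversight": 250,
    "adversarial_robustness": 250,
}


def binary_audit(controls: dict) -> str:
    """Pass only if every control is in place; anything less is a fail."""
    return "pass" if all(controls.get(name, False) for name in WEIGHTS) else "fail"


def graded_score(controls: dict) -> int:
    """Add up the weights of the controls that are in place."""
    return sum(weight for name, weight in WEIGHTS.items() if controls.get(name, False))


def gaps(controls: dict) -> list:
    """List the controls that are still missing."""
    return [name for name in WEIGHTS if not controls.get(name, False)]


# Company A has nothing in place; Company B is missing one control.
company_a = {name: False for name in WEIGHTS}
company_b = {name: True for name in WEIGHTS}
company_b["adversarial_robustness"] = False

for label, controls in (("A", company_a), ("B", company_b)):
    print(label, binary_audit(controls), graded_score(controls), gaps(controls))
```

Both companies come back as "fail" from the binary audit. The graded view separates them, and the list of gaps shows what each one still has to do.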

Binary created a market that is frozen. Companies that haven’t started say “we’re exploring.” Companies that have invested say “what’s the point?” Companies that passed say “we’re done” and stop paying attention until the next incident.

A Score Changes Everything

A score does what binary cannot. It makes progress visible. It enables comparison. It creates continuous accountability. It creates a market that moves.

At 50, you know you’re early but you’ve started. At 400, you can see progress. At 680, you know exactly which gaps separate you from 800. At 900, you can prove your posture to your board, your regulator, your customers. And tomorrow, if your score drops because a new regulation kicked in or a model drifted, you see it immediately.

Governance isn’t pass/fail. It’s a score.

What’s Next

In my last newsletter, I announced METRIS.

Today, I wanted to tell you why it exists. Because the founding insight behind METRIS isn’t technical. It’s historical. Every critical system eventually gets a number. AI’s turn is now.

In the coming weeks, I’ll share: how the scoring engine actually works, what the first assessments are revealing about the state of AI governance in the wild, and how organizations are using their score to move from “we’re exploring” to “here’s where we stand.”

If you’re building AI and wrestling with governance, reply to this newsletter. Tell me what’s broken. What’s working. What you wish existed. I read everything.

Every critical system in human history eventually got a number.

Lending got a credit score. Health got diagnostics. Education got assessments. Security got ratings. Financial health got audited statements.

AI is now the most consequential system in modern life. And it has no number.

Not yet.

Suneeta Modekurty

Founder & Chief Architect, METRIS™

ISO 42001 Lead Auditor | Sanjeevani AI LLC

A.I.N.S.T.E.I.N. is a reader-supported publication. Subscribe to follow the METRIS journey.