How AI Actually Learns

And Why It Means Data Is Everything

My friend (S) called me after reading last week’s article.

“Okay, I get that AI learns from examples instead of following rules. But how does that actually work? How does showing a computer millions of pictures teach it anything?”

I remember asking the same question years ago. I had a statistics background, understood regression, could build models. But the idea that a system could learn patterns without explicit programming took time to sink in.

Let me explain it the way I wish someone had explained it to me.

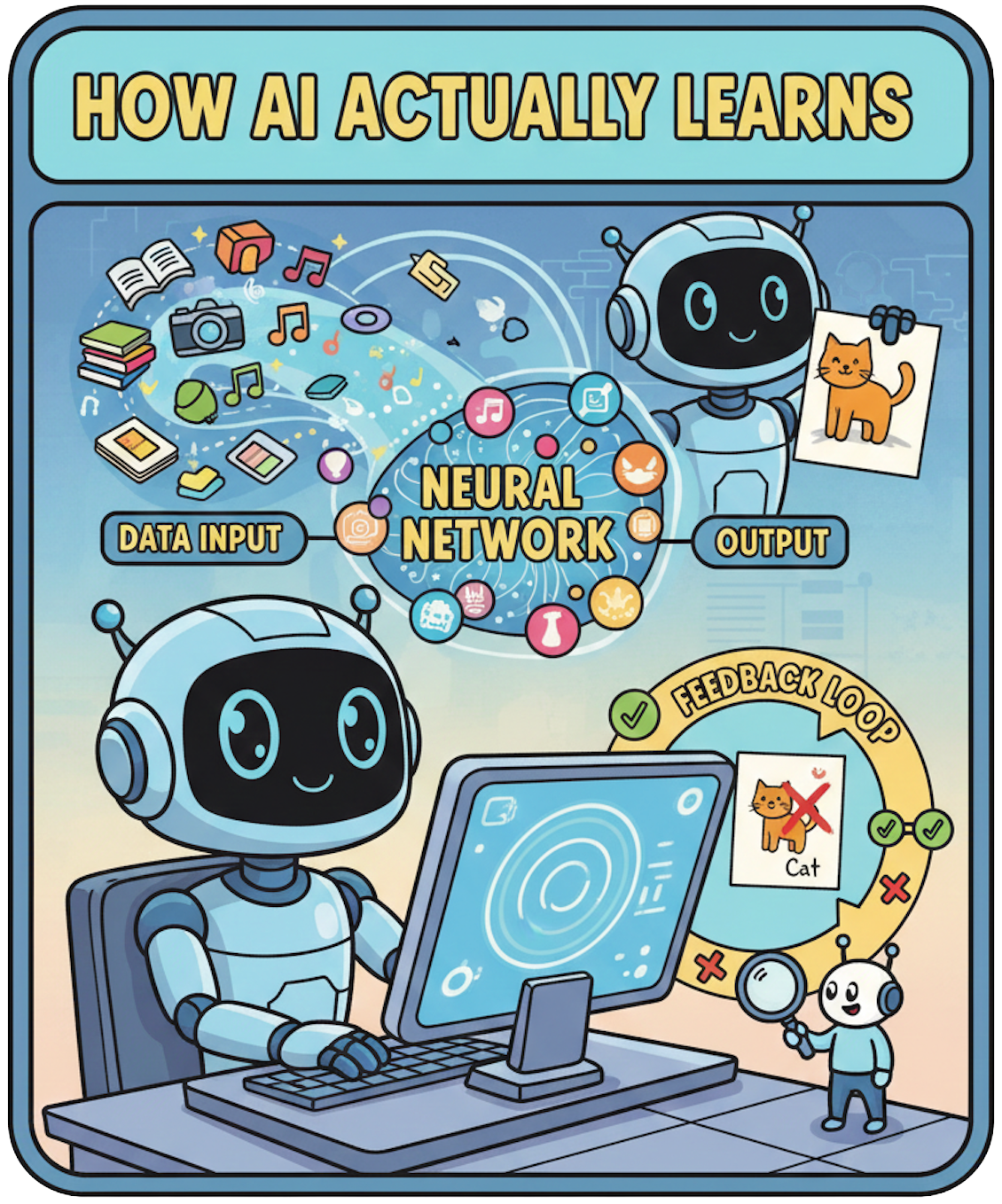

The Training Loop

Training an AI system is simpler than most people think.

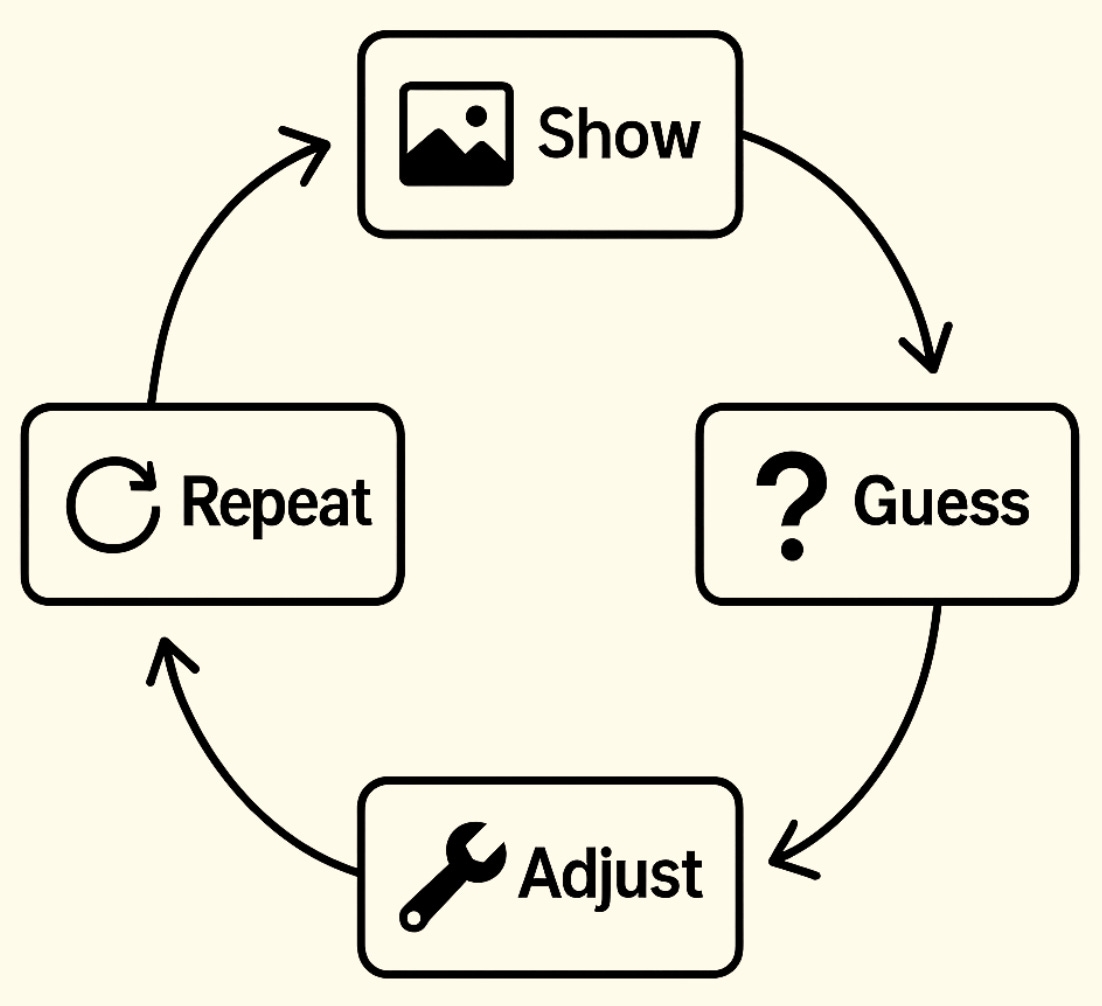

You show it an example. It makes a guess. You tell it if the guess was right or wrong. It adjusts slightly. You repeat this millions of times.

That’s it. Show, guess, adjust, repeat.

Take spam detection. You show the system an email. It guesses whether the email is spam or not. You tell it the correct answer. If it guessed wrong, you adjust it slightly, the way you tune an FM radio to get the station you want. You’re adjusting the frequency until the signal comes through clear.

Each training example is another turn of the dial. A million examples means a million tiny adjustments. Eventually, the system locks onto the right frequency and starts recognizing patterns accurately.

Engineers call this “supervised learning.” I call it industrial-scale pattern tuning.
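
If you want to see the loop in code, here is a minimal sketch in Python. It uses a toy perceptron, one of the simplest learning rules, and is not how a production spam filter works. The word counts, labels, and learning rate are all made up for illustration.

```python
# Toy "show, guess, adjust, repeat" loop (a perceptron).
# The features, labels, and learning rate are illustrative assumptions.

# Each example: ([count of "free", count of "meeting"], label), 1 = spam, 0 = not spam
EXAMPLES = [
    ([3, 0], 1),
    ([2, 0], 1),
    ([0, 2], 0),
    ([0, 1], 0),
    ([1, 0], 1),
    ([1, 3], 0),
]

def guess(weights, bias, features):
    """Guess: spam (1) if the weighted score is above zero, else not spam (0)."""
    score = bias + sum(w * x for w, x in zip(weights, features))
    return 1 if score > 0 else 0

def train(examples, epochs=20, learning_rate=0.1):
    weights = [0.0, 0.0]  # the "dial" starts with no knowledge at all
    bias = 0.0
    for _ in range(epochs):                      # repeat
        for features, label in examples:         # show
            error = label - guess(weights, bias, features)  # 0 if right, +1 or -1 if wrong
            # adjust: a wrong guess nudges each weight slightly toward the correct answer
            weights = [w + learning_rate * error * x for w, x in zip(weights, features)]
            bias += learning_rate * error
    return weights, bias

weights, bias = train(EXAMPLES)
predictions = [guess(weights, bias, features) for features, _ in EXAMPLES]
```

Nothing in this code says "the word free means spam." The weights start at zero and drift toward that pattern only because the labeled examples push them there.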

How to Find the Right Frequency

Think of it like this.

When you tune a radio, you’re searching through noise to find a clear signal. Too far left, static. Too far right, static. You keep adjusting until the music comes through.

AI training works the same way. The system starts in noise, with random guesses and no real pattern recognition. Each training example helps it tune toward the signal. “Wrong answer? Uh-oh, adjust left. Still wrong? It’s OK, adjust right. Closer. Closer. There... we go!”

After millions of these micro-adjustments, the system finds the frequency where patterns become clear. It can now distinguish spam from legitimate email, fraud from normal transactions, disease from healthy tissue.

The thing is, nobody programs these frequencies directly. The system finds them through repetition. Show enough examples, give enough feedback, and it tunes itself.

This is powerful. It’s also why we can’t always explain exactly what the system learned. It found a frequency that works, but describing that frequency in human terms is harder than it sounds. And it needs a lot of data to get there.
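
For the curious, the "adjust left, adjust right" idea can be sketched with a single dial. This is a simplified illustration, assuming one adjustable number and a made-up relationship (the correct answer is always twice the input). Real systems tune millions of dials at once, but the nudge is the same in spirit.

```python
# One-dial tuning sketch. The hidden relationship (answer = 2 * input) is an
# assumption invented for this example; the loop only ever sees its own errors.
EXAMPLES = [(1.0, 2.0), (2.0, 4.0), (3.0, 6.0)]  # (input, correct answer)

def tune(examples, start=-3.0, step=0.05, passes=50):
    dial = start          # a deliberately bad starting position ("static")
    history = []          # how wrong each guess was, in order
    for _ in range(passes):
        for x, correct in examples:
            error = correct - dial * x   # positive: guessed too low, negative: too high
            dial += step * error * x     # nudge the dial in the direction that shrinks the error
            history.append(abs(error))
    return dial, history

dial, history = tune(EXAMPLES)
```

The dial ends up very close to 2, yet nobody typed the number 2 into the code. The only place it lives is in the examples.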

Why It Needs So Much Data

S asked the follow-up: “Why millions of examples? Can’t it learn faster?”

The world is messier than training sets assume.

Most dogs are easy to recognize. But what about a chihuahua that looks like a large rat? A mop that resembles a sheepdog? A wolf that could pass for a husky? A blurry photo taken at night?

If your system only saw a hundred dogs during training, it never encountered these edge cases. It tuned itself to recognize “typical” dogs and goes static on anything unusual.

Volume matters because edge cases matter. More data means more weird situations to tune against. Fewer surprises when the system hits the real world.
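
A bit of arithmetic shows why volume matters. The sketch below assumes, purely for illustration, that one photo in a thousand is a particular kind of oddball, and that training photos are drawn independently. Neither number comes from a real dataset.

```python
# Chance that a training set contains at least one example of a rare case.
# Assumes independent draws; the 1-in-1,000 rate is a made-up illustration.

def chance_of_seeing(rare_rate, num_examples):
    """Probability that at least one of num_examples is the rare case."""
    return 1 - (1 - rare_rate) ** num_examples

RARE_RATE = 0.001  # hypothetical: 1 photo in 1,000 is the odd edge case

small_set = chance_of_seeing(RARE_RATE, 100)        # a hundred dogs
large_set = chance_of_seeing(RARE_RATE, 1_000_000)  # a million dogs
```

Under these assumptions, a hundred-photo training set has a bit under a 10 percent chance of containing even one such oddball, while a million-photo set is all but certain to contain many.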

You’ve probably run into these gaps yourself, even if you didn’t know why:

Your phone’s autocorrect keeps “fixing” your name or a word you use often to something wrong. The system was tuned on data that didn’t include your name or your industry’s jargon.

You travel for work, use your credit card in a new city, and it gets blocked for “suspicious activity.” The fraud detection system wasn’t tuned for your edge case: a sudden location change by a legitimate user.

Your voice assistant works fine for your colleague but constantly mishears you. It was tuned on voices that sound like your colleague. Your accent, speech pattern, or tone wasn’t well-represented in the training data.

These aren’t bugs. The systems are working exactly as tuned. They just weren’t tuned on enough edge cases to handle you in that situation.

I’ve seen this repeatedly. A system works perfectly in testing, then falls apart in production because the training data was too clean, too narrow, too optimistic about what reality actually looks like. The test environment was a quiet studio recording. Reality is a live concert with feedback and crowd noise.

Here’s the Secret

AI doesn’t “understand” anything.

When a system recognizes a cat, it’s not thinking “whiskers, pointy ears, therefore cat.” It found a frequency where certain pixel patterns correlate with the label “cat.” The ‘why’ doesn’t exist for the system. Only the correlation does.

This is both the power and the danger.

The power: AI can tune into patterns humans would never detect. It can process more examples than any person could review in a lifetime. It operates continuously without fatigue.

The danger: AI has no common sense. It doesn’t know that a drawing of a cat isn’t a real cat. It doesn’t understand context. It just matches patterns, including patterns that shouldn’t matter.

I’ve sat in meetings where executives said “our AI understands customer intent.” No. It’s tuned to predict customer intent based on historical patterns. That’s different. Understanding implies reasoning, context, judgment. AI doesn’t reason. It correlates at the frequency it was trained on.

AI’s Strengths and Blind Spots

After years working with AI, I’ve developed a rough rule.

AI tunes well for:

Pattern recognition at scale (images, text, transactions)

Finding anomalies in large datasets

Predicting outcomes from historical data

Generating content similar to training examples

AI can’t tune for:

Reasoning about cause and effect

Common sense and context

Situations that never appeared in training

Explaining why it made a decision

There’s an observation from robotics researcher Hans Moravec: what’s easy for humans is hard for AI, and vice versa.

A five-year-old can walk across a messy room, recognize grandma from any angle, and hold a conversation. These “simple” tasks are incredibly hard for AI, and no amount of tuning gets you common sense.

But that same five-year-old can’t analyze a million transactions for fraud or review every medical study published in the last decade. AI tunes for that easily. Humans can’t.

AI is a tool with specific strengths. Expecting it to replace human judgment misunderstands what tuning actually achieves.

What This Means for Governance

Now, let’s connect the dots:

AI learns by tuning itself to patterns in data

It doesn’t understand those patterns. It replicates them

Whatever patterns exist in your data become the frequency your AI broadcasts on

What if those patterns include bias? What if the data is outdated? What if it is incomplete, or unrepresentative of the people you’re serving?

The AI doesn’t know. It can’t know. It tunes to whatever you fed it and treats those patterns as the correct signal.

This is why AI governance doesn’t start with the model. It starts with data.

A perfectly tuned system trained on the wrong data broadcasts the wrong signal very confidently, at scale, with no awareness that anything is off. Does this mean the system is broken? No. It’s tuned exactly to what it was given.
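
One simple data check is to count who is actually in the training set. The sketch below is a hypothetical audit, not a standard tool. The `accent` field, the record counts, and the 10 percent cutoff are all assumptions chosen for the example.

```python
from collections import Counter

def underrepresented(records, key, min_share=0.10):
    """Return groups whose share of the records falls below min_share."""
    counts = Counter(record[key] for record in records)
    total = sum(counts.values())
    return {group: count / total
            for group, count in counts.items()
            if count / total < min_share}

# Hypothetical voice-assistant training set: 100 recordings tagged by accent.
TRAINING_RECORDS = (
    [{"accent": "A"}] * 90
    + [{"accent": "B"}] * 8
    + [{"accent": "C"}] * 2
)

flagged = underrepresented(TRAINING_RECORDS, "accent")
```

Here accents B and C are flagged because they fall below the cutoff. Note the limit of this check: a group with zero records never shows up in the counts at all, so you still need a list of who you expect to serve to compare against.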

The Questions You Should Be Asking

Before you trust any AI system, ask:

What data was it tuned on?

How old is that data?

Who’s represented in the training set and who’s missing?

Has it been tested on data it never saw during training?

What happens when it encounters something completely new?

What are the known failure modes?

These aren’t technical questions. They’re governance questions. And in my experience auditing systems across EdTech, HealthTech, FinTech, and Insurance, most organizations can’t answer them.

They deploy the system. They trust the vendor. They hope for the best.

Hope is not a governance strategy.
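
If you want to picture what "tested on data it never saw during training" means, here is a minimal sketch. It assumes a made-up dataset of transaction amounts and a deliberately crude model (a single cutoff). The function names and the 25 percent holdout are illustrative choices, not a standard.

```python
import random

def split(rows, test_fraction=0.25, seed=0):
    """Shuffle the rows, then hold back a fraction the model never trains on."""
    shuffled = rows[:]
    random.Random(seed).shuffle(shuffled)
    cut = int(len(shuffled) * (1 - test_fraction))
    return shuffled[:cut], shuffled[cut:]

def fit_threshold(train_rows):
    """Crude model: a cutoff halfway between the average of each class."""
    flagged = [amount for amount, label in train_rows if label == 1]
    normal = [amount for amount, label in train_rows if label == 0]
    return (sum(flagged) / len(flagged) + sum(normal) / len(normal)) / 2

def accuracy(threshold, rows):
    """Share of rows where 'amount above threshold' matches the label."""
    correct = sum(1 for amount, label in rows
                  if (1 if amount > threshold else 0) == label)
    return correct / len(rows)

# Hypothetical data: (transaction amount, 1 = flagged, 0 = normal)
ROWS = [(amount, 1 if amount > 50 else 0) for amount in range(0, 100, 5)]

train_rows, test_rows = split(ROWS)
threshold = fit_threshold(train_rows)
train_accuracy = accuracy(threshold, train_rows)
test_accuracy = accuracy(threshold, test_rows)  # the number that matters
```

The governance question is whether anyone can show you the second number, and whether the held-back data looks like the people and situations the system will actually meet.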

Next Week

Now that you understand how AI learns, and how it tunes itself to patterns in data, we’re ready to look at what happens when that data is wrong.

Next week: The Silent Architecture of Trust. Why data quality isn’t an IT problem but the foundation of whether your AI works at all. We’ll look at what bad data actually looks like, how small errors compound into system-wide failures, and what governance means in practice.

Your AI is only as good as the signal it was tuned on. Next week, we examine that signal.

Suneeta Modekurty

Founder, A.I.N.S.T.E.I.N.