The Silent Architecture of Trust in AI

Data Quality Isn't an IT Problem. It's Everything

S called me again after last week’s article.

“Okay, I get it now. AI tunes itself to patterns in data. It doesn’t understand. It just finds the frequency that works. But what happens when the data is... wrong?”

That’s the question that keeps me up at night.

The Hiring Tool That Worked Perfectly

A company deployed an AI hiring tool. Fast, efficient, everyone impressed.

Then someone asked: “Why did it reject this candidate?”

They checked the system. The algorithm worked. The metrics looked accurate.

But when they traced backwards, they found the real issue: training data from 2019 that excluded entire demographics.

The AI didn’t fail. It tuned itself exactly to what it was given. It found a frequency, and that frequency was biased.

This is what happens when the signal is wrong.

Data Is Evidence, Not Truth

Here’s the uncomfortable reality most teams don’t want to hear: data is not truth. It’s evidence. And evidence carries assumptions, blind spots, and biases.

Every row in a dataset represents a choice someone made about what to record and how. Those choices shape what the AI learns.

In AI systems, data plays two roles:

It teaches the model how to see the world

It judges the model’s decisions later

When both the teacher and the judge are flawed, the system has no way to know it’s wrong.

This is why governance must begin before the first line of code. Before the model. Before the architecture. At the data.

The Anatomy of Bad Data

Most bad data isn’t intentional. It’s small, everyday errors that quietly corrupt AI systems.

Inaccurate Data: A salary entered as $500,000 instead of $50,000. Seems minor. But now every calculation downstream is wrong.

Incomplete Data: A patient’s record missing age or medical history. The model can’t learn accurate patterns from what isn’t there.

Inconsistent Data: “USA”, “U.S.”, and “United States” treated as three separate values. The system sees three different countries.

Outdated Data: Using 2018 customer trends to predict 2025 behavior. The world changed. The model didn’t.

Biased Data: A hiring dataset with mostly male candidates. The AI learns to favor what it saw most. Not because anyone told it to, but because that’s what the data showed.

Bad data isn’t just incorrect. It’s unverified assumptions treated as fact.
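To make the first four categories concrete, here is a minimal sketch of a row-level audit in plain Python. Everything in it is an assumption for illustration: the field names, the salary bounds, the three-year freshness cutoff, and the alias table are hypothetical, and a real pipeline would need rules agreed with the people who own the data. Bias is left out on purpose, because it is a property of the whole dataset rather than of any single row.

```python
from datetime import date

# Hypothetical rules; real thresholds should come from the data's owners.
REQUIRED_FIELDS = ("salary", "country", "age", "recorded_on")
SALARY_RANGE = (10_000, 300_000)      # assumed plausible bounds
MAX_AGE_DAYS = 3 * 365                # assumed freshness cutoff
COUNTRY_ALIASES = {
    "usa": "United States",
    "u.s.": "United States",
    "united states": "United States",
}

def audit_row(row, today):
    """Return a list of human-readable issues found in one record."""
    issues = []

    # Incomplete: required fields that are absent or empty.
    missing = [f for f in REQUIRED_FIELDS if row.get(f) in (None, "")]
    if missing:
        issues.append("incomplete: missing " + ", ".join(missing))

    # Inaccurate: a value outside the range we consider plausible.
    salary = row.get("salary")
    if salary is not None and not (SALARY_RANGE[0] <= salary <= SALARY_RANGE[1]):
        issues.append(f"inaccurate: salary {salary} outside plausible range")

    # Inconsistent: a label that is not in its canonical spelling.
    country = row.get("country")
    if country:
        canonical = COUNTRY_ALIASES.get(country.strip().lower())
        if canonical is None:
            issues.append(f"inconsistent: unknown country label {country!r}")
        elif canonical != country:
            issues.append(f"inconsistent: {country!r} should be {canonical!r}")

    # Outdated: the record is older than the freshness cutoff.
    recorded_on = row.get("recorded_on")
    if recorded_on is not None and (today - recorded_on).days > MAX_AGE_DAYS:
        issues.append(f"outdated: recorded on {recorded_on.isoformat()}")

    return issues

suspect = {"salary": 500_000, "country": "U.S.", "age": None,
           "recorded_on": date(2018, 6, 1)}
for issue in audit_row(suspect, today=date(2025, 1, 1)):
    print(issue)
```

The point of returning a list of issues instead of silently fixing the row is that someone has to decide what the right value is. The audit only makes the question visible.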

The Domino Effect

Remember the FM tuning analogy? The system adjusts based on every example it sees.

Now imagine what happens when flawed data enters the pipeline:

Feature engineering amplifies wrong signals

Model tunes to flawed patterns

Testing passes because the test data has the same flaws

Deployed model reinforces errors in production

Retraining locks the bias in as “truth”

Each step makes it harder to find the original problem. By the time you notice something’s wrong, the error has multiplied through the entire system.

This isn’t sabotage. It’s systemic inertia. And it happens more often than anyone wants to admit.
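The step where testing passes is the one that surprises people, so here is a toy sketch of it. The data is invented: a hypothetical hiring history in which qualified candidates from group B were rejected anyway. The "model" is just a lookup table that memorizes the most common outcome for each kind of candidate, which is enough to show the effect without any library.

```python
from collections import Counter, defaultdict

def make_history(n_per_cell):
    """Build hypothetical hiring records whose labels carry a bias."""
    records = []
    for group in ("A", "B"):
        for qualified in (True, False):
            for _ in range(n_per_cell):
                # Biased history: only qualified candidates from group A were hired.
                hired = qualified and group == "A"
                records.append({"group": group, "qualified": qualified, "hired": hired})
    return records

def train(records):
    """Learn the most common historical outcome for each (group, qualified) pair."""
    votes = defaultdict(Counter)
    for r in records:
        votes[(r["group"], r["qualified"])][r["hired"]] += 1
    return {key: counts.most_common(1)[0][0] for key, counts in votes.items()}

def accuracy(model, records, label):
    """Share of records where the model's prediction matches the given label."""
    correct = sum(model[(r["group"], r["qualified"])] == label(r) for r in records)
    return correct / len(records)

history = make_history(n_per_cell=10)
# Alternate rows so both splits contain every kind of candidate.
train_set, test_set = history[::2], history[1::2]
model = train(train_set)

# Judged by the same flawed history, the model looks perfect.
print(accuracy(model, test_set, label=lambda r: r["hired"]))      # 1.0
# Judged by an independent standard (hire if qualified), it does not.
print(accuracy(model, test_set, label=lambda r: r["qualified"]))  # 0.75
```

The held-out test set did its job. It just inherited the same blind spot as the training set, so the only way to see the gap was to bring in a label that did not come from the flawed history.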

The Real Cost

McKinsey reports bad data costs enterprises 15–20% of revenue.

But the real cost isn’t money. It’s justice.

Predictive policing systems that target the wrong neighborhoods—because historical arrest data reflected biased enforcement, not actual crime.

Loan models that reject qualified applicants because missing fields correlated with demographics the system learned to penalize.

Healthcare algorithms that recommended less care for certain patients because cost data was used as a proxy for health needs.

These weren’t evil intentions. They were patterns in data, learned at scale, deployed without adequate governance.

AI governance isn’t slowing innovation. It’s protecting people from systems that don’t know they’re causing harm.

Governance: The Immune System

Think of governance as your AI’s immune system. It detects, prevents, and corrects damage before it reaches people.

Several frameworks guide this:

ISO/IEC 5259 — Data quality standards

ISO/IEC 42001 — AI Management Systems

NIST AI RMF — Govern, Map, Measure, Manage

At its core, governance answers one question: “What data caused this decision, and who is responsible?”

If you can’t answer that, you don’t have governance. You have hope.
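One way to be able to answer that question is to fingerprint each dataset and attach the fingerprint to every decision the model makes. The sketch below is a hedged illustration using only Python's standard library. The record fields, function names, and example values are hypothetical, and it assumes rows are JSON-serializable. It is not taken from any of the frameworks above.

```python
import hashlib
import json
from dataclasses import dataclass

@dataclass(frozen=True)
class DatasetRecord:
    """Hypothetical lineage entry: what the data is, where it came from, who owns it."""
    name: str
    source: str
    owner: str
    fingerprint: str

def fingerprint(rows):
    """SHA-256 over a canonical JSON dump, so any change to the rows changes the hash."""
    canonical = json.dumps(rows, sort_keys=True).encode("utf-8")
    return hashlib.sha256(canonical).hexdigest()

def register(name, source, owner, rows):
    """Create the lineage entry for a dataset at the moment it is used for training."""
    return DatasetRecord(name, source, owner, fingerprint(rows))

def log_decision(decision, model_version, dataset):
    """Tie one model decision back to the exact data and the accountable owner."""
    return {
        "decision": decision,
        "model_version": model_version,
        "dataset": dataset.name,
        "dataset_fingerprint": dataset.fingerprint,
        "owner": dataset.owner,
    }

rows = [{"id": 1, "salary": 50_000}, {"id": 2, "salary": 62_000}]
training_data = register("applicants_2019", "hr_export", "data.steward@example.com", rows)
print(log_decision("reject", "model-v1", training_data))
```

With a log like this, "Why did it reject this candidate?" leads to a named dataset, an exact version of it, and a person to ask.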

Data as Ethical Material

This might be the most important shift in thinking: data isn’t just technical material. It’s ethical material.

Every row in your dataset represents a human life: their job application, their loan request, their medical history, their interactions with your product.

When you train an AI on that data, you’re encoding decisions that will affect people like them. At scale. Automatically. Without human review.

Clean data isn’t a feature. It’s a responsibility.

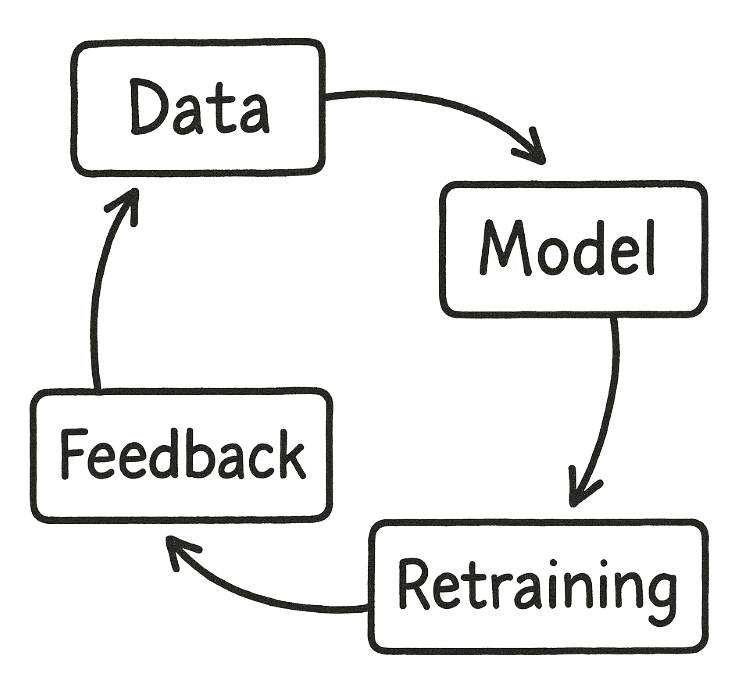

The Loop That Never Ends

Data → Model → Decision → Feedback → Retraining → Data

This loop runs continuously in every AI system. New data comes in. Models update. Decisions change.

Governance keeps this loop honest. It asks:

Where did this data come from?

What assumptions does it carry?

Who does it represent and who does it miss?

What decisions will it drive?

Who’s accountable when something goes wrong?

Without governance, the loop runs blind. And blind systems cause harm they can’t see.
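The question "Who does it represent and who does it miss?" can be turned into a check that runs every time the loop comes around. Here is a minimal sketch that compares each group's share of the dataset against a reference share. The field name, the reference shares, and the 10-point tolerance are all assumptions for illustration. Choosing the right reference population is itself a governance decision, not something code can settle.

```python
from collections import Counter

def representation_gaps(records, field, reference_shares, tolerance=0.10):
    """Return groups whose share of the data differs from the reference by more than tolerance.

    The result maps group -> (observed share, expected share).
    """
    if not records:
        raise ValueError("cannot measure representation in an empty dataset")
    counts = Counter(r[field] for r in records)
    total = len(records)
    gaps = {}
    for group, expected in reference_shares.items():
        observed = counts.get(group, 0) / total
        if abs(observed - expected) > tolerance:
            gaps[group] = (observed, expected)
    return gaps

# Hypothetical hiring dataset: 8 male applicants, 2 female applicants.
applicants = [{"gender": "male"}] * 8 + [{"gender": "female"}] * 2
reference = {"male": 0.5, "female": 0.5}   # assumed reference population

for group, (observed, expected) in representation_gaps(applicants, "gender", reference).items():
    print(f"{group}: {observed:.0%} of data, {expected:.0%} expected")
```

A group that is missing from the data entirely shows up here with an observed share of zero, which is exactly the case a model can never flag on its own.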

Key Takeaways

For Builders:

Audit data before you architect models

Track data lineage like you track code

Build feedback loops for bias and drift

For Leaders:

Data quality is a governance issue, not an IT task

Invest in data stewardship roles

Ask “Where did this data come from?” before “What can this model do?”

For Everyone:

The AI systems affecting your life are only as good as their training data

When something feels wrong, it might be a data problem nobody caught

Demand transparency, not just explanations

A Question to Sit With

If data is the DNA of AI, what kind of organism are we creating?

Next Week

We’ve covered what AI is, how it learns, and why data is the foundation of everything.

Next week, we go deeper: specific case studies of AI failures that made headlines. Amazon’s hiring AI. Healthcare algorithms. Credit systems. What went wrong, why nobody caught it, and what governance would have changed.

Suneeta Modekurty

Founder, A.I.N.S.T.E.I.N.